Hadassah Drukarch presents at the Fair Medicine and AI conference

At the International Online Conference 'Fair Medicine and Artificial Intelligence', organised by the University of Tübingen (Germany), Hadassah Drukarch, junior researcher at eLaw, gave a presentation on how current algorithm-based systems may reinforce biases in healthcare. This topic forms part of the research on Diversity and AI conducted with colleagues at eLaw (Bart Custers and Eduard Fosch-Villaronga) and the Creative Intelligence Lab (Tessa Verhoef) at Leiden University, and with Pranav Khanna, a former student of the Advanced LL.M. in Law and Digital Technologies.

AI in medicine has evolved dramatically over the past five decades. In the healthcare sector, AI is increasingly used in both biomedical research and clinical practice, for tasks ranging from automated data collection to drug discovery, disease diagnosis and automated surgery. In short, the promise of AI in healthcare is that it can help deliver safer and more personalised medicine in the near future. Although these advances may bring remarkable progress for medicine and healthcare delivery, introducing and implementing AI in healthcare raises a variety of ethical, legal and societal concerns. More research is needed before AI can perform well in the wild, and diversity and inclusion is one area with clear room for improvement.

In healthcare provision, the sociocultural dimension of gender plays a significant role in shaping awareness of a particular disease, attitudes towards it, how its symptoms manifest, and how its signs and symptoms are interpreted. This sociocultural dimension also affects other essential aspects such as access to healthcare, doctors' attitudes towards patients, and even how pain is communicated. Still, most algorithms do not consider these aspects and include no mechanisms for detecting bias.

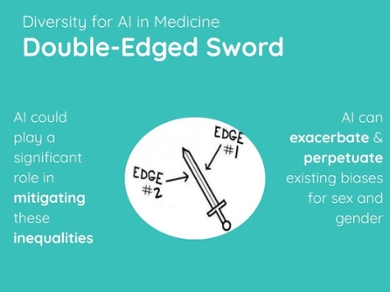

The question, then, is whether algorithms can account for all of these aspects. AI in medicine can be seen as a "double-edged sword": on the one hand, these advances may exacerbate and perpetuate existing sex and gender biases; on the other, they could play a significant role in mitigating these inequalities. While we may want AI to account for sex and gender differences between individuals because doing so may improve performance and enable more precise medicine, attention should be paid to the impact this may have on (unwanted) discrimination, medical safety, privacy and data protection, and the future of work and training for humans in the healthcare environment.

The future of healthcare automation is thus an increasingly complex interplay between humans and machines, in which the roles and responsibilities of each, and between the two, will inevitably blur. This matters because the consequences of omitting the sex and gender dimensions from algorithms that support decision-making are poorly understood and often underestimated. History shows that this is nothing new: minorities have experienced such treatment all too often. In the age of AI, we again face similar issues and challenges, and we can choose either to ignore them or to address them properly.

Want to know more? A recorded version of the conference presentation is available on the original website.